Breakthrough in On-Device Model Update Challenge: Airabi's Differential Algorithm Gains International Academic Recognition

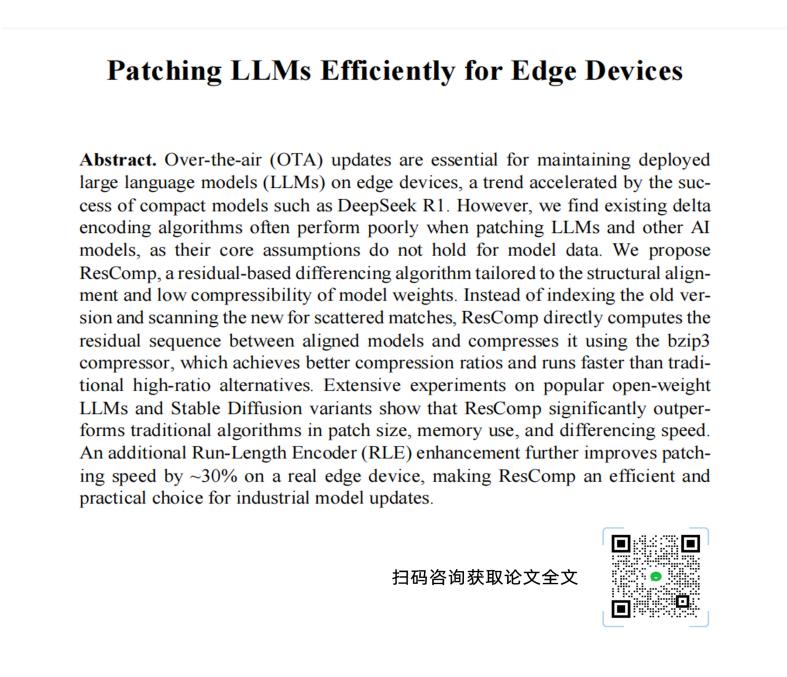

A new research result on the iterative upgrade of edge-side large models was recently announced: ResComp, a differential upgrade algorithm for edge-side large models developed by Airabi Intelligent Technology, has been accepted by the Pacific Rim International Conference on Artificial Intelligence (PRICAI). The acceptance marks international academic recognition of the technology's contribution to lightweight deployment and efficient updating. The work provides key technical support for the continuous iteration of edge-side large models and accelerates the large-scale rollout of AI on terminal devices.

Edge-Side Large Models Enter a High-Frequency Iteration Phase, and the Upgrade Bottleneck Urgently Needs to Be Overcome

As large models continue to expand their application scenarios, the shift from cloud to edge has become a clear trend. Especially in the automotive industry, edge-side large models are rapidly being implemented in core scenarios such as assisted driving and intelligent cockpits, thanks to their advantages of low latency, high privacy, and offline availability. They are being applied to a wide range of areas, including in-car interaction, operational optimization, and customized private models.

However, model iteration and upgrade have become a problem in their own right. Traditional OTA (Over-the-Air) upgrades are inadequate for model files that can reach several gigabytes or more. Because large models have a complex parameter structure and their data has already been aggressively compressed through quantization, compressing the whole file yields little further reduction, so transmission efficiency stays very low. Given the limited resources on the edge side, differential upgrades have become the preferred way to achieve efficient updates.

Traditional differential algorithms show three major shortcomings when used to update large models on edge devices. The paper points out that traditional delta-encoding algorithms are not adapted to the special structure of LLM parameter data and therefore face three significant challenges when generating model patches: low compression ratios, slow update speeds, and high memory consumption. These issues severely restrict experience upgrades and functional iteration for AI on edge devices.

Airabi Proposes ResComp, a Specialized Differential Algorithm for Edge Large Models, Demonstrating Outstanding Performance in Real-World Tests

To address this industry bottleneck, the team proposes ResComp, a residual-based differential algorithm.

The ResComp algorithm reconstructs the update logic of edge-side LLMs through "structural alignment + residual optimization," achieving three core technological breakthroughs.

First, it breaks the "discrete matching" assumption underlying traditional algorithms by directly aligning the weight structures of the old and new models. This captures parameter change patterns accurately and reduces redundant data at the source.

Second, it introduces a residual-sequence computation mechanism and combines it with the bzip3 compressor, yielding a dual gain of "structural optimization + efficient compression."

Third, it incorporates a Run-Length Encoding (RLE) enhancement mechanism that further increases patching speed in actual deployment by 30%.
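The three ideas above can be illustrated with a minimal Python sketch. It is not Airabi's implementation: it assumes the old and new weights are byte buffers of identical layout and size, it uses the standard-library `bz2` module as a stand-in for bzip3, and the helper names (`residual`, `make_patch`, `apply_patch`, `rle_encode`, `rle_decode`) are hypothetical. How ResComp actually applies RLE is not described in the article, so the run-length helpers only show the general technique on a residual sequence.

```python
import bz2
import random
from typing import List, Tuple


def residual(old: bytes, new: bytes) -> bytes:
    """Position-wise byte difference (mod 256) of two aligned buffers."""
    if len(old) != len(new):
        raise ValueError("sketch assumes both versions share the same layout and size")
    return bytes((n - o) & 0xFF for o, n in zip(old, new))


def make_patch(old: bytes, new: bytes) -> bytes:
    """Compress the residual; bz2 is an assumed stand-in for bzip3."""
    return bz2.compress(residual(old, new))


def apply_patch(old: bytes, patch: bytes) -> bytes:
    """Rebuild the new buffer by adding the residual back onto the old one."""
    res = bz2.decompress(patch)
    if len(res) != len(old):
        raise ValueError("patch does not match the old buffer")
    return bytes((o + r) & 0xFF for o, r in zip(old, res))


def rle_encode(data: bytes) -> List[Tuple[int, int]]:
    """Collapse runs of identical bytes into (value, run_length) pairs."""
    runs: List[Tuple[int, int]] = []
    for b in data:
        if runs and runs[-1][0] == b:
            runs[-1] = (b, runs[-1][1] + 1)
        else:
            runs.append((b, 1))
    return runs


def rle_decode(runs: List[Tuple[int, int]]) -> bytes:
    """Expand (value, run_length) pairs back into the original bytes."""
    return b"".join(bytes([value]) * count for value, count in runs)


# Toy "weights": 64 KiB of pseudo-random bytes, with every 64th byte nudged by 1.
rng = random.Random(0)
old_weights = bytes(rng.getrandbits(8) for _ in range(64 * 1024))
new_weights = bytes((b + 1) & 0xFF if i % 64 == 0 else b
                    for i, b in enumerate(old_weights))

patch = make_patch(old_weights, new_weights)
assert apply_patch(old_weights, patch) == new_weights
print(len(patch), "byte patch vs", len(bz2.compress(new_weights)),
      "bytes when compressing the whole new file")
```

Because the two buffers are aligned position by position, unchanged weights turn into zeros in the residual, and long zero runs are exactly what both a general-purpose compressor and RLE handle well. Compressing the new file on its own gains almost nothing in this toy case, since the data looks random.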

Measured performance is strong: differential packages shrink to roughly 20% to 22.5% of the size produced by the open-source algorithm.

To verify technical reliability, the team conducted multi-scenario tests on several mainstream open-weight LLMs and Stable Diffusion-based image generation models. The results show that whether it is text-understanding LLMs or multimodal models, the ResComp algorithm consistently achieves the comprehensive advantages of "smaller patch size, lower memory usage, and faster update speed."

Compared with certain open-source algorithms, Airabi's ResComp technology uses an intelligent differential algorithm framework to identify the differences between large model versions accurately, generate minimal differential packages, and significantly shorten upgrade times, bringing a new solution to the industry. (Open-source information is provided at the end)

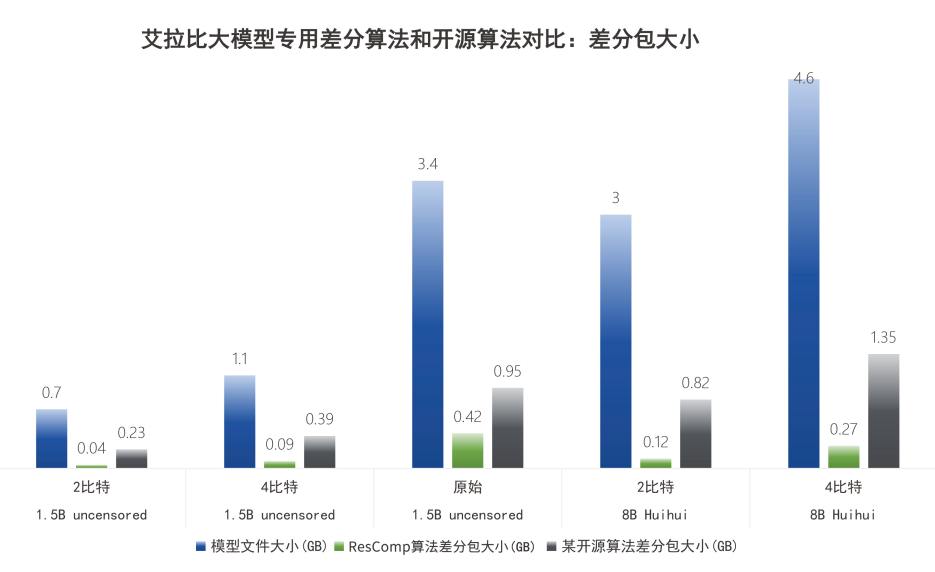

We selected the most popular models on the market and compared file sizes and differential compression results in both the original floating-point format and the quantized format. Comparing an open-source differential algorithm with Airabi's ResComp gave the following results:

Differential package size: Taking the DeepSeek 1.5B 4-bit model as an example, the differential package generated by ResComp is only 90MB, while the open-source algorithm requires 400MB. The Airabi differential package is only 5.7% of the size of the original package and 22.5% of the size of the open-source algorithm's differential package. For the 8B 4-bit model, the ResComp differential package is 0.27GB, while the open-source algorithm requires 1.35GB. The Airabi differential package is 5.2% of the size of the original package and 19.9% of the size of the open-source algorithm's differential package.
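The ratios quoted above can be rechecked from the rounded sizes with a few lines of Python. The helper name `percent` is hypothetical, and the full-package sizes are only implied by the stated percentages rather than reported in the article, so they are rough back-calculations.

```python
def percent(part: float, whole: float) -> float:
    """Return part as a percentage of whole, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# ResComp patch size relative to the open-source algorithm's patch size.
print(percent(90, 400))      # 1.5B 4-bit, sizes in MB -> 22.5
print(percent(0.27, 1.35))   # 8B 4-bit, sizes in GB  -> 20.0

# Full-package sizes implied by "5.7% / 5.2% of the original package"
# (assumption: back-calculated from rounded figures, not reported values).
implied_full_1_5b_mb = 90 / 0.057
implied_full_8b_gb = 0.27 / 0.052
print(round(implied_full_1_5b_mb), round(implied_full_8b_gb, 2))  # 1579 5.19
```

The rounded 8B sizes give 20.0% rather than the 19.9% quoted, which is consistent with the article's percentage having been computed from unrounded package sizes.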

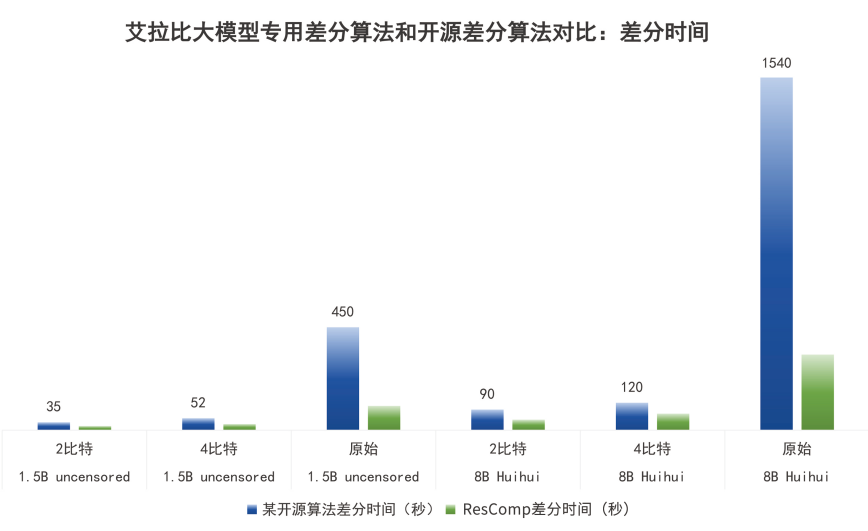

Upgrade time: With DeepSeek's 1.5B 4-bit model, for example, the ResComp upgrade takes 50% of the time required by the open-source algorithm. For the 8B 2-bit model, the upgrade takes 51% of the time required by the open-source algorithm.

(1.5B uncensored, i.e., thirdeyeai/DeepSeek-R1-Distill-Qwen-1.5B-uncensored)

(8B Huihui, namely huihui-ai/DeepSeek-R1-Distill-Llama-8B-abliterated)

The technical breakthrough by Airabi not only possesses academic significance but also has a wide range of industrial application prospects. Currently, the algorithm has been successfully commercialized and integrated into Airabi's differential upgrade standardized platform. It is gradually being applied to the company's core business lines, such as intelligent terminal AI solutions and the vehicle large model upgrade platform.

It is particularly noteworthy that in the context of the Internet of Vehicles, differential upgrades of large models on the device side can not only significantly reduce data traffic costs but also enable seamless updates for users, avoiding interruptions to in-car services during the upgrade process. Even in situations with unstable network signals or no connectivity, reliable upgrades can still be accomplished through local caching and differential restoration.

With the continuous improvement of edge computing hardware performance and advancements in model optimization technologies, the breadth and depth of on-device large model applications will continue to expand. As a key infrastructure supporting the continuous evolution of models, the maturity of differential upgrade technology will directly impact the deployment pace of AI applications and user experience.

The introduction and implementation of Airabi's ResComp algorithm provide the industry with an academically endorsed and empirically validated on-device model upgrade solution, driving the evolution of edge AI applications toward "lightweight deployment and high-frequency iteration."

Abstract Information:

