Embodied intelligence enters its "moment of enlightenment"

As 2025 draws to a close, the embodied intelligence field is especially lively.

On December 18, SenseTime's subsidiary Daxiao Robotics released the Enlightenment World Model 3.0 (Kairos 3.0). Ten days earlier, Horizon Robotics Lab had showcased a simulation data engine for embodied intelligence, and before that, at the World Robot Conference, Unitree Technology CEO Wang Xingxing bluntly stated that "model issues are more critical than data issues."

These developments outline an industry consensus: embodied intelligence is shifting from a hardware race to a "brain" upgrade, with world models becoming the key to breaking the deadlock.

Why does it have to be a world model?

If AI 1.0 is the "slow era" of manual labeling, and AI 2.0 is the "fast era" of large language models, then the era of embodied intelligence is the "challenging era" of data gaps.

The essence of embodied intelligence is enabling machines to interact autonomously with the physical world and solve complex tasks in it, all of which depends on massive amounts of high-quality data. In reality, however, the industry faces a cliff-like gap in data scale.

Autonomous driving systems, which often involve hundreds of billions of parameters and nearly a thousand TOPS of computing power, are backed by massive amounts of data. By contrast, embodied intelligence in its early mass-production stage has only 100,000 hours of data, a gap of as much as 40 times. Moreover, embodied application scenarios involve richer multimodal perception, more complex action execution, and more detailed sensor data, so data collection is far harder and costlier than it was for earlier AI technologies.

The traditional R&D path can no longer keep up. In the "machine-centered" R&D paradigm, data collection is a costly, labor-intensive task: a single robot's hardware can easily cost hundreds of thousands, and operators must work around the clock, teleoperating the real machine to repeat the same action thousands of times just to collect one usable dataset. More critically, data collected this way is tightly bound to specific hardware. Grasping skills trained on robotic arm model A cannot be transferred directly to model B, so "intelligence" stays confined within a single shell as a non-transferable "exclusive skill."

Wang Xiaogang, SenseTime co-founder and executive director and chairman of Daxiao Robotics, said frankly in an interview: "This approach essentially requires humans to adapt to machines, with high data collection costs and low efficiency, which makes it completely unsustainable for an industry trying to scale and generalize."

The other path, purely visual learning, on which Tesla and Figure AI have pinned high hopes, is also running into difficulties. It tries to let robots "imitate" human actions by watching vast numbers of videos, but it lacks an underlying understanding of the three-dimensional physical world. A robot can see a person "pick up a cup," but it cannot grasp the cup's weight or material, or the intention behind the action, which is "drinking water." Because this method lacks physical laws and causal logic, it faces an insurmountable "reality gap": actions learned from videos easily fail when applied in real-world scenarios.

The fundamental dilemma of these two paths is that the former "makes things difficult for people" while the latter "makes things difficult for machines"; at root, neither captures how humans actually interact with the world. World models are emerging to fill this gap.

Embodied intelligence is not simply about making robots "move"; it is about enabling them to truly understand the laws of the physical world and to think and act like humans. For embodied intelligence, therefore, world models are not an "optional question" but a "required one." Without a world model, robots will remain "tools that execute commands"; with one, they can have a "brain that understands the world" and shift from "passive response" to "active exploration."

The logic behind SenseTime's world model

Having worked deeply in AI for 11 years, SenseTime has formed its own understanding of the field and has consistently focused on building out a complete technical system.

Daxiao Robotics' first step was to propose the "human-centric" ACE embodied R&D paradigm, which builds a complete technical system running from environmental data collection through the Enlightenment World Model 3.0 to embodied interaction.

Image Source: Daxiao Robot

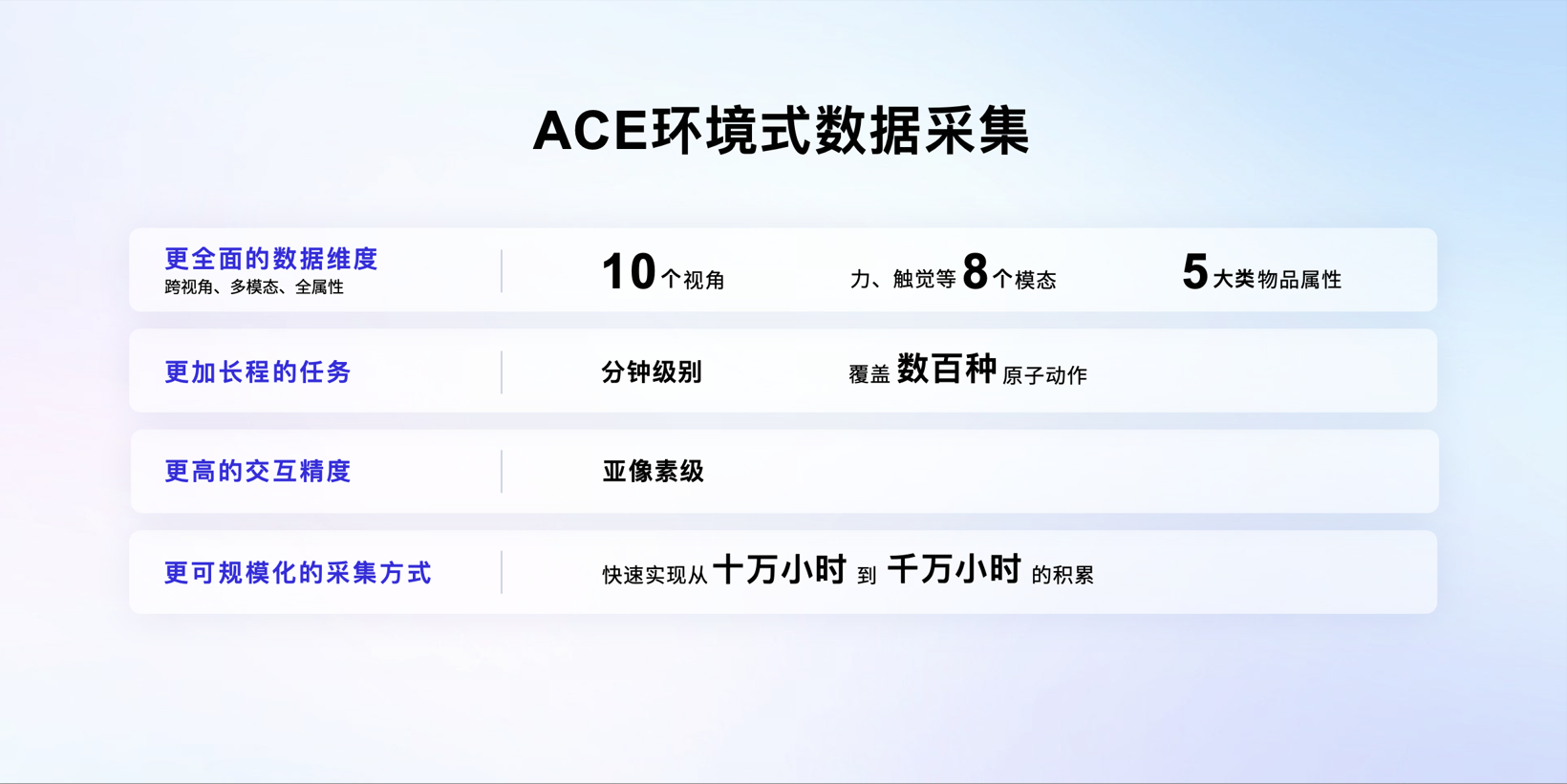

By combining first-person-perspective data with third-person-perspective collection, Daxiao has built an efficient, high-quality, "human-centered" data collection model that achieves four major breakthroughs. First, the data covers more than 10 perspectives, 8 modalities, and 4 categories of object attributes, allowing robots to "see, feel, and understand." Second, task duration reaches the minute level, supporting complex tasks made up of hundreds of atomic actions, such as the full sequence of opening the refrigerator, taking out a drink, unscrewing the bottle cap, and handing it over. Third, interaction precision reaches the sub-pixel level, enough to accurately track fine operations such as grasping eggs and placing tableware. Fourth, collection scales efficiently, quickly accumulating data from hundreds of thousands up to tens of millions of hours.

Image Source: Daxiao Robot

In the data processing phase, SenseTime transforms the collected raw data of "human-object-environment" into dynamic scene data that can be directly used for model training through three major technologies: temporal consistency alignment, interactive dynamic trajectory prediction modeling, and physical correctness simulation calibration.

Image Source: Daxiao Robot

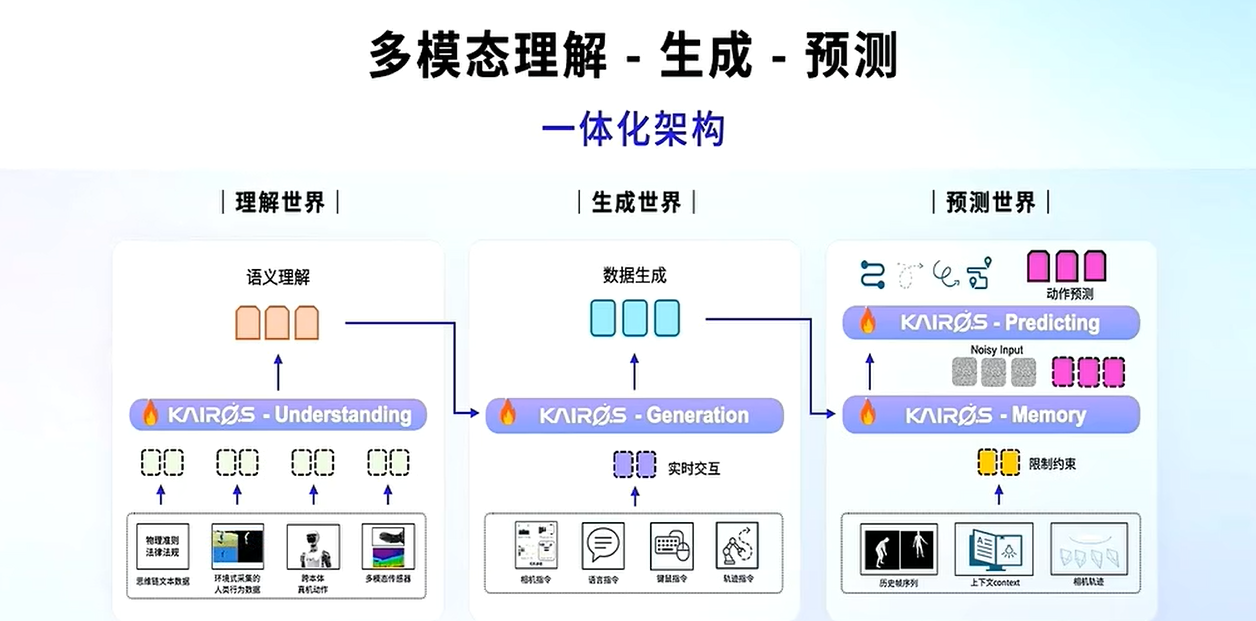

Under this paradigm, environmental data collection can gather tens of millions of hours of data per year. The Enlightenment World Model 3.0 serves as the "brain," integrating physical laws, human behavior, and real robot actions, so that machines not only understand the world but can also generate long-horizon dynamic and static interaction scenes and predict the likely outcomes of different actions.

Ecosystem collaboration across the entire industry chain further amplifies SenseTime's technological advantages. On the chip side, the Enlightenment World Model 3.0 has been adapted to domestic chips from several companies, including MuXi (MetaX), Biren Technology, Sugon, Huixi Intelligent, and Yingwei Innovation. This adaptation not only significantly improves performance on these chips but also establishes a "model-chip" synergy ecosystem.

"In the past, it was difficult to adapt domestic chips to models because the models were not open-source and the chip architectures were not transparent, which made communication costs between the two sides extremely high. Now that we have open-sourced the models, chip manufacturers can optimize their algorithms directly against them, significantly improving efficiency," said Wang Xiaogang.

At the same time, Daxiao Robotics has launched the Embodied Super Brain Module A1 and is working with industry partners to build an innovation ecosystem for embodied intelligence, aiming to accelerate the commercialization of robots and unlock the value of the embodied intelligence industry.

Building on the Daxiao Robotics team's leading pure-vision, map-free, end-to-end VLA (vision-language-action) model, a robot dog equipped with the Embodied Super Brain Module A1 can adapt to complex, dynamic, and unfamiliar environments without pre-built high-precision maps. Relying on the model's visual understanding and motion planning, the robot can generate robust, safe, and sensible paths in dynamic environments, achieving true "autonomous action."

Furthermore, Daxiao Robotics combines Insta360's panoramic sensing solution with SenseTime's Ark general vision platform to build a comprehensive, high-precision environmental sensing system. The system covers more than 10 industries and over 150 intelligent application scenarios, from everyday behavior analysis to early warning of special risks. "Ten years ago, we integrated stationary cameras into the Ark platform; today, we are integrating mobile robots into it. The underlying application challenges are actually similar," said Wang Xiaogang.

On the hardware side, Daxiao Robotics has formed deep partnerships with Insta360, Wolong Electric, and Pasinee, drawing on their perception and other hardware to strengthen how its world model and module products capture information from multi-perspective, dynamic scenes.

Image Source: DaXiao Robot

For cloud services and data, Daxiao Robotics works with platforms such as SenseTime SenseCore, Tencent Cloud, Volcano Engine, SenseTime Ark, and Suanfeng Information to build an end-to-end support system. Drawing on cloud providers' compute scheduling capabilities, it lowers R&D costs for small and medium-sized enterprises; drawing on data resources from Coupas and the China Southwest Architectural Design and Research Institute, it continuously improves the world model's scene generalization, so that solutions can quickly adapt to the specific needs of different industries.

On the robot body (hardware platform) side, Daxiao has partnered with leading players such as Zhiyuan Robotics, Galaxy General, Titanium Tiger Robotics, and the National Geospatial Center to combine the new R&D paradigm and the world model with the robot hardware adaptation chain, jointly creating solutions for different scenarios. This accumulated scenario experience lets the Enlightenment World Model 3.0 adapt quickly to the needs of different industries.

This combination of "technology + scenario + ecosystem" makes the Enlightenment World Model 3.0 not just a technical product but a core engine driving the development of the embodied intelligence industry.

Embodied intelligence is just beginning: breaking through with open source

"Today's world model is just a starting point," Wang Xiaogang acknowledged at the media briefing. "The scenarios we can access on our own are limited. Only by involving developers from around the world can we enable the world model to cover more vertical scenarios."

This is precisely the core logic behind Daxiao Robotics' decision to open-source the Enlightenment World Model 3.0.

The balance between open source and commercialization in the AI field has always been a focus of controversy. SenseTime's choice requires not only technical confidence but also a profound understanding of the rules of industrial development.

The open-source release of the Enlightenment World Model 3.0 first addresses an industry pain point: insufficient scenario coverage. Embodied intelligence applications are enormously diverse, from household services to industrial production and from medical care to logistics and warehousing, and each scenario has very different requirements. No single company, however strong, can collect data and optimize models for every scenario.

To lower the threshold for developers, SenseTime has simultaneously launched the Enlightenment Embodied Intelligence World Model Product Platform. This platform integrates multimodal generation capabilities such as "Text-to-World, Image-driven World, Trace-shaped World," featuring 11 major categories, 54 subcategories, and a total of 328 tags, covering 115 vertical embodied scenarios.

Developers only need to upload an image and enter a natural language command to quickly generate visual task simulation content. For example, by entering "robot sorting apples," the platform can automatically generate training data that includes the physical properties of apples, sorting motion trajectories, and environmental interaction logic. More importantly, developers can share their created data and models on the platform, forming a positive cycle of "creation-sharing-iteration."

In an era when NVIDIA chips dominate AI training, model adaptation is rarely a concern, since most models run smoothly on NVIDIA hardware. Domestic chips, however, face diverse architectures and immature ecosystems, so adapting one model to multiple domestic chips takes significant R&D resources. "If the model is not open source, chip manufacturers don't know its underlying logic, and optimization is like 'blind men feeling an elephant.' If the model is open source, chip manufacturers can optimize their hardware architectures and algorithms directly for its computational needs, significantly improving efficiency," explained Wang Xiaogang.

More importantly, open source is reshaping the competitive landscape of the embodied intelligence industry. In the past, industry competition was more about "building behind closed doors," with companies accumulating data and developing models independently, forming technological barriers. Open source shifts industry competition from "single-point technology contests" to "ecosystem collaboration contests."

The future competition is not between individual companies, but between ecosystems. Whoever can build a more open and prosperous ecosystem will dominate in the era of embodied intelligence.

From redefining data collection with the ACE paradigm to reconstructing machine cognition with world models, and reshaping industry ecosystems with open-source strategies, Daxiao Robotics' series of initiatives are injecting new vitality into the industry. However, the real challenges are just beginning. How to transform technological advantages into commercial value, achieve large-scale implementation in different scenarios, and build a sustainable business model are all issues that the industry needs to address collectively.

【Copyright and Disclaimer】The above information is collected and organized by PlastMatch. The copyright belongs to the original author. This article is reprinted for the purpose of providing more information, and it does not imply that PlastMatch endorses the views expressed in the article or guarantees its accuracy. If there are any errors in the source attribution or if your legitimate rights have been infringed, please contact us, and we will promptly correct or remove the content. If other media, websites, or individuals use the aforementioned content, they must clearly indicate the original source and origin of the work and assume legal responsibility on their own.
